Autonomous Weapon Systems (AWS)

Key points of AWS

AI

- AWS use AI to process information, make decisions and adapt to their environment.

- Machine learning.

- Help the systems evolve and react.

Sensors

- Help capture and interpret the environment in order to track targets.

Datasets

- Trained on extensive datasets to recognise patterns.

- The more data, the more it knows.

- Quality of training data can significantly impact performance.

- Should it be trained on real or simulated data? Every war is different; wars are not inherent to human nature. The system needs to know what a civilian is, what a soldier is, etc. Bad data→bad system.

Algorithms

- They process data and take actions.

- They are the determining factor.

Autonomy

- Total human control (manual operation)

- Like a rifle.

- Automation (human in the loop)

- Human is still there, just doing other things.

- Semi-autonomy (human on the loop)

- You oversee

- Full autonomy (human out of the loop)

- You are out

Autonomy is a spectrum.

Why is AWS special?

A definition of what AWS does differently is needed:

- What makes the weapon special compared to a manual one?

- For what is this critical?

- A drone could refuel itself, but it’s not really critical.

- It is critical when the drone controls and engages in situations by itself, fully autonomously.

It’s not only a single weapon system but different components in different locations that identify, select and engage a target without a human user executing these tasks.

Domains

Autonomy appears in all domains:

- Aerial AWS.

- Land-Based AWS.

- Naval AWS.

- Space-Based AWS.

- Cyberspace.

Examples

Kargu-2 (Turkey)

- AI-powered kamikaze drone carrying an explosive payload.

- You can also attach other things such as guns to drones.

- Fully autonomous and swarm-capable (facial/thermal imaging).

- It attacks by itself.

- Only autonomous systems can swarm well.

- Is it really autonomous, though? At first Turkey said yes, but when errors emerged it backtracked.

Poseidon (Russia)

- Nuclear-capable autonomous underwater drone (torpedo).

- No human control after launch.

- Can stay in water for very long periods.

- Designed for strategic nuclear strikes.

- Deep sea dominance.

Advantages and risks

Advantages

- No guilt.

- But somebody coded it, designed it, trained it, etc… So is this really true?

- Efficient.

- Force multiplication.

- Adaptable.

- Fewer losses on our side.

- Avoiding human error.

- Cost reduction.

- Cheaper than humans.

Risks

- Proliferation and misuse.

- No cost to life.

- Dehumanisation

- Easier wars→ push a button.

- Less time and will for negotiation.

- Cyber attacks.

- Guilt dilemma.

- Non-state actors.

- Oppression, war crimes.

- Arms race.

Ethical and human rights

- ?

Evolution of discussions

Human Rights Council

Christof Heyns raised concerns through his reports:

- Emphasis on human rights.

- Human dignity (right).

- Life and death decisions.

- Ethical angle.

Campaign to Stop Killer Robots

- Civil society: global coalition of NGOs, activists and experts.

- Wants a pre-emptive ban treaty.

- Prohibit AWS used against humans (anti-personnel) and AWS without human control.

- Less tolerant on the autonomy spectrum.

CCW in Geneva: GGE on LAWS

- 128 states with 5 protocols.

- IHL focus/weapons.

- Consensus - Military and humanitarian.

- Not only humanitarian champions with humanitarian objectives, but also users of these weapons such as China and Israel.

- 2014: discussions begin.

- 2016 onwards→GGE, with a 3-year mandate running until 2026.

- 2014-16→trying to understand AWS.

- 2017-18→IHL compliance-based approach. We have IHL and we can apply it.

- 2019-21→focus on human control.

- 2022-24→prohibitions and regulations.

- 2024 & beyond→elements of an instrument (binding): rolling text towards a future treaty.

IHL compliance

The consensus is that international law (especially humanitarian law) applies:

- Not in a vacuum.

- Existing international law—especially IHL—already governs AWS; law doesn’t operate in a vacuum. But an IHL-only frame presumes armed conflict and risks normalizing/accelerating weapon development. Think the “Tallinn Manual” effect: clarity, but also legitimation.

- NGOs don’t like this.

- Compliance is key

- We restate relevant IHL rules and principles.

- We apply them to AWS.

- We say what a state has to do / Implement IHL.

- IHL governs conduct and protects civilians and combatants (GC).

- Weapons are limited, and the use of force must be proportionate, necessary and directed only at lawful military targets.

- Distinction.

- Can AWS fulfil this principle?

- Proportionality.

- Can AWS make this judgement?

- Precaution.

- Can AWS limit harm by adjusting their behaviour dynamically?

- Compliance.

- Can you program IHL into a system?

- Accountability.

- Who is responsible for violations? The developer, manufacturer, commander or operator?

- Autonomy vs. Human Control:

- IHL assumes human decision-making — where do autonomous systems fit?

Switzerland’s view

- Two-tier approach: prohibit and regulate.

- The tip of the iceberg is prohibited and the rest is regulated. Only those systems that can’t comply with IHL should be prohibited.

- It is prohibited in all circumstances to develop or use LAWS:

- which are inherently indiscriminate, or which are otherwise incapable of being used in compliance with IHL.

- if their effects in attack cannot be anticipated and controlled, as required by IHL in the circumstances of their use.

- It is prohibited in all circumstances to employ LAWS that operate without context-appropriate human control and judgement.

- States need to ensure LAWS:

- are adequately predictable, reliable, traceable and explainable.

- are operated under a responsible chain of command & control.

- are subject to moral, ethical and legal consideration by a human (when selecting/engaging).

- Ensure that the types of targets, duration, geographical scope and scale of the operation are controlled/limited.

Specific technical measures

- Ensure that you have specific technical systems that comply with regulations.

- These measures are useful to:

- Reduce specific risks.

- Conduct tests and legal weapons reviews.

- Exchange information: transparency and confidence-building mechanisms.

Way forward for AWS?

Rolling text

Until 2026 there is an agreement to work on a rolling text as a working document that contains elements for an instrument (legally binding).

Some don’t call it negotiations but developing elements (e.g.: Russia).

Issue on definitions

- Identify and engage→excludes certain systems.

- Human control→every system and situation is different, so supervision needs to be context-appropriate. In many cases it’s not about controlling but about judging.

Existing IHL

Is current IHL enough or do we need to develop new rules?

Beyond IHL?

Even after dealing with IHL, what else needs to be regulated?

What type of agreement?

- Arms control? Limits? Types?

- Which limits? Geographical, time, number of attacks, etc…

- Should anti-personnel use be prohibited?

- Only military objectives by nature?

- Export control regime? Components? Dual-use goods?

- Control transfers of dual-use tech (civilian-made, militarily usable): compute chips/accelerators, model weights/autonomy software, EO/IR/radar/LiDAR sensors, IMUs/GNSS-denied nav, RF/SATCOM, swarming/control software, datasets/simulators

- Agree on and codify ethical rules?

- Where may those weapons be used?

Nature of instrument

- Politically binding instrument?

- Principles.

- Best practices.

- Rules of the road.

- Legally binding instrument?

- A CCW Protocol? (Protocol VI).

- A UN treaty? (like the Treaty on the Prohibition of Nuclear Weapons).

- A treaty outside the UN? (like the Ottawa Treaty on anti-personnel mines).

Success?

Or failure? Continue in the CCW if it doesn’t work? We need the US, Russia and China… if not, who will go outside? Internal and external developments have to be considered.

Alternatives to GGE, in Geneva or New York? What if big AWS powers are not included?

Role of the UNGA in NYC

- In Geneva consensus=de facto veto.

- Everyone slowly or just a few fast?

- Dilemma of scope: more than IHL?

- Ongoing discussions in the UNGA First Committee 2025.

The UNGA passed a resolution to put a bit of pressure on the Geneva process.

Beyond AWS: AIMD

- AWS is one core concern.

- But AI in the military domain is much broader.

- AIMD (Artificial Intelligence in the Military Domain).

- Logistics.

- Decision support systems.

- Command and control of nuclear weapons (NC3).

- Intelligence, surveillance, reconnaissance (ISR).

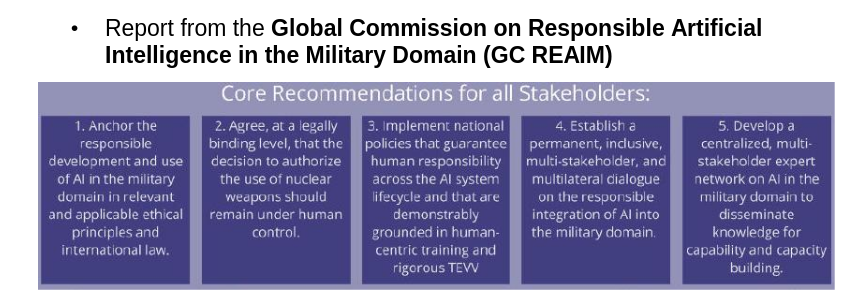

Summit process: REAIM

- The Hague 2023→call to action and global commission.

- Seoul 2024→blueprint for action, raise awareness.

- A Coruña 2026→soon.

- Capacity building.

- Not scaring people off: except for Russia, the big powers will be there (USA and China).

Report

So?

- Bring AIMD into UNGA to get the broadest possible support.

- UNGA resolution 2024→launch and UNSG report (September 2025).

- To know what we need and to raise awareness.

- UNGA resolution 2025→launch informal discussions in Geneva in 2026.

Other issues

Ottawa avenue

- Positive: some move forward and then others follow.

- But these high standards are then not respected during wars.

- And accountability organs such as the Security Council are going through a difficult time.

Two tier approach

In the future we could get to wars where we are more accurate and there are fewer accidents. But AI moves quickly, so regulation can become outdated quickly.

Summary

- Massive AWS/AI development in the next 10-20 years.

- Agreeing on such a technical issue is a challenge.

- Case by case approaches.

- Everybody wants to regulate, but nobody wants to fall behind.

- Start from soft (politically binding) and go to hard (legally binding) norms.

- No specific rules, but no vacuum either→IL/IHL apply.

- Work towards a regulation dealing with the specific challenges AWS pose.

- Beyond regulation: Responsible Behaviour – Soft Norms.